The Cognitive Bias Checklist

Cognitive biases are mental shortcuts hardwired in your brain that, in the past, helped your ancestors to survive. Although these mental heuristics might have been useful then, today they lead to many errors, sometimes very grave errors.

This post is a non-exhaustive checklist of some biases I think are useful to know. I also try to provide an example of the bias and some questions you might ask yourself to (hopefully) increase your likelihood of dodging it.

Keep in mind, these aren’t blanket statements. Biases are tendencies we have, not something that happens 100% of the time. Also, some of the names of these biases might not be what you call them. Some of them might not even have “bias” in the name. That’s ok. I’ve likely renamed a few just for my own personal taste.

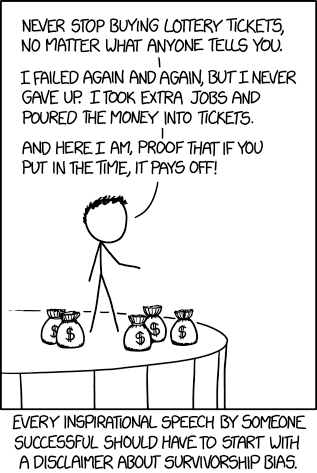

Survivorship bias

You draw conclusions from things that made it past some filter and ignore the things that didn’t

In WWII, the US military wanted to figure out which parts of its planes to reinforce so that more of them would survive combat. They looked at the distribution of bullet holes on the planes that came back and planned to armor the areas with the highest concentration of holes.

Abraham Wald caught this instance of survivorship bias. The returning planes only showed hits that a plane could survive. The planes hit where the survivors had few holes, such as the engines, were the ones that never came back. So he recommended armoring the areas where the returning planes had the fewest holes.

Ask yourself: Do I have the whole dataset or am I drawing conclusions just based on what is visible? Who is missing from the sample population? What could be making this sample population nonrandom relative to the underlying population?
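A small simulation makes Wald’s point concrete. It is a sketch with made-up numbers. The four sections, the three hits per plane, and the per-hit loss probabilities in `LOSS_PROB` are all hypothetical, and hits are assumed to land uniformly at random. Every section gets hit about equally often. But if you count holes only on the planes that return, the engines look like the safest place to get hit.

```python
import random
from collections import Counter

SECTIONS = ["engine", "fuselage", "wings", "tail"]
# Hypothetical chance that a single hit to each section brings the plane down.
LOSS_PROB = {"engine": 0.6, "fuselage": 0.05, "wings": 0.05, "tail": 0.1}

def fly_mission(rng, hits_per_plane=3):
    """Return (returned, hits) for one plane; each hit lands on a random section."""
    hits = [rng.choice(SECTIONS) for _ in range(hits_per_plane)]
    shot_down = any(rng.random() < LOSS_PROB[section] for section in hits)
    return not shot_down, hits

def observed_hits(n_planes=10_000, seed=0):
    """Count hits on all planes versus only on the planes that made it back."""
    rng = random.Random(seed)
    all_hits, returned_hits = Counter(), Counter()
    for _ in range(n_planes):
        returned, hits = fly_mission(rng)
        all_hits.update(hits)
        if returned:
            returned_hits.update(hits)  # the only holes anyone gets to inspect
    return all_hits, returned_hits

all_hits, returned_hits = observed_hits()
for section in SECTIONS:
    print(f"{section:9s} all planes: {all_hits[section]:6d}  "
          f"returning planes: {returned_hits[section]:6d}")
```

Engine hits make up about a quarter of all hits but a much smaller share of the hits on returning planes. That gap is exactly the missing data.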

Association bias

You automatically associate things with painful or pleasurable experiences, which leads you to like or dislike something because it’s associated with something good or bad

Your assistant is always telling you something’s gone wrong. Due to association bias, you start to associate your assistant with bad feelings, even though the problems aren’t ones they created. Don’t shoot the messenger.

Ask yourself: Am I evaluating this thing, situation, or person based on their track record? Am I encouraging people to tell me bad news immediately? Am I separating the event from the association? Do I like their personality just because they’re attractive? Am I mistaking the person’s appearance for reality?

Disconfirmation bias

You require more proof for ideas or evidence you don’t want to believe

John isn’t convinced of the theory of evolution by natural selection. You keep showing him fossils of incremental stages, but he keeps asking for fossils of a stage in between. You find them, and he asks for the stages between those.

Reciprocation bias

You reciprocate what others have done for or to you

You got coffee with Jerry today and he paid for it. After a few minutes of talking, he invited you to a rally he was going to. You feel an obligation to go even though you don’t want to. After all, he did just buy you a coffee.

Ask yourself: If I’m about to make a concession, what do I really want to achieve?

Memory bias

You remember selectively and incorrectly, and you’re influenced by how things are worded (e.g. the framing effect)

David’s upset because his girlfriend seems to only remember his mistakes. In a twist, David tends to only remember the negative things his girlfriend says. They both are guilty of memory bias.

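Amos Tversky and Daniel Kahneman demonstrated the framing effect in 1981. One group was told that 600 people face a deadly disease and was asked to choose between two programs:

- Program A: 200 people will be saved.

- Program B: a 1/3 probability that all 600 people will be saved and a 2/3 probability that no one will be saved.

Most chose A, the sure thing. A second group got the same choice framed in terms of deaths:

- Program C: 400 people will die.

- Program D: a 1/3 probability that nobody will die and a 2/3 probability that all 600 will die.

Most chose D, the gamble. But A and C describe the same outcome, and so do B and D. The only thing that changed was whether the options were framed as lives saved or lives lost.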
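Tversky and Kahneman’s problem involves 600 people and a gamble with a 1/3 chance that everyone is saved (or, in the loss framing, that nobody dies) and a 2/3 chance that nobody is saved (everyone dies). It also offers a sure option that saves 200 (equivalently, 400 die). A quick check with exact fractions shows that these are the same options in different words. This sketch treats the chances as exactly 1/3 and 2/3 and converts deaths to survivors so every option is measured in the same units.

```python
from fractions import Fraction

TOTAL = 600

def expected_survivors(outcomes):
    """Expected number of survivors for a list of (probability, survivors) pairs."""
    return sum(p * survivors for p, survivors in outcomes)

# Gain frame, risky option: 1/3 chance all 600 are saved, 2/3 chance nobody is saved.
gain_gamble = [(Fraction(1, 3), TOTAL), (Fraction(2, 3), 0)]

# Loss frame, risky option: 1/3 chance nobody dies, 2/3 chance all 600 die.
# Deaths are converted to survivors so both frames use the same units.
loss_gamble = [(Fraction(1, 3), TOTAL - 0), (Fraction(2, 3), TOTAL - 600)]

# Sure options: 200 saved, or equivalently 400 die.
sure_gain = [(Fraction(1), 200)]
sure_loss = [(Fraction(1), TOTAL - 400)]

for name, option in [("gain gamble", gain_gamble), ("loss gamble", loss_gamble),
                     ("sure gain", sure_gain), ("sure loss", sure_loss)]:
    print(f"{name}: {expected_survivors(option)} expected survivors")
```

Every option comes out to 200 expected survivors, and the two gambles have identical outcomes, not just identical averages. Nothing but the wording separates the gain frame from the loss frame.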

Ask yourself: Am I depending on my memory or someone else’s memory or testimony? Am I keeping records of important events?

Absurdity bias

You assign zero probability to events you can’t remember happening or that have never happened

A turkey is fed by the farmer every day, and each day makes him more confident that he’ll be fed tomorrow. Then Thanksgiving comes along. This is similar to Bertrand Russell’s chicken problem.
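This sketch puts numbers on the turkey’s reasoning by comparing two ways of estimating, from past days alone, the chance of not being fed tomorrow. The naive frequency estimate assigns exactly zero to anything that hasn’t happened yet, which is this bias in numeric form. Laplace’s rule of succession is a swapped-in technique that doesn’t come from the text. It keeps the estimate above zero, but it still can’t see Thanksgiving coming, because nothing in the turkey’s data says anything about the farmer’s plans.

```python
def naive_p_not_fed(days_fed, days_observed):
    """Share of observed days without food: exactly zero if that never happened."""
    return (days_observed - days_fed) / days_observed

def laplace_p_not_fed(days_fed, days_observed):
    """Laplace's rule of succession: pretend we saw one extra fed and one extra unfed day."""
    return (days_observed - days_fed + 1) / (days_observed + 2)

# The turkey has been fed on every single day so far.
for days in (1, 10, 100, 1000):
    print(f"after {days:4d} days: naive {naive_p_not_fed(days, days):.4f}, "
          f"Laplace {laplace_p_not_fed(days, days):.4f}")
```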

Omission and abstract blindness

You only see things that grab your attention and neglect important missing information or abstract information

Ask yourself: Although I see the available information, what other information could I be missing? Are there other explanations for this? Have I used inversion to turn the situation upside down? Did I compare both positive and negative characteristics? Does my judgement change as a result?

Illusion of transparency

You expect others to know what you mean by your words since you know what you mean by your words

Daniel thought he had explained it to his classmate perfectly. He thinks to himself, “why doesn’t he get it?”

Ask yourself: Am I actively culling ambiguity from my words (both spoken and written), since they’re probably more ambiguous than I think?

Planning fallacy

You make plans that are unrealistically close to best-case scenarios

You planned your trip only to find out you didn’t have enough time to do everything you wanted.

Note that the planning fallacy is asymmetric: it skews your predictions toward best-case scenarios instead of spreading your errors evenly in both directions.

Ask yourself: Am I looking at the base rates (the statistics) for how often this kind of plan or forecast has succeeded in the past to create a baseline prediction? Am I using specific information about the case to adjust the baseline prediction if there are particular reasons to expect the optimistic bias to be more or less pronounced in this plan or forecast than in others of the same type? Did I perform a reference class forecast?
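Here is a minimal sketch of a reference class forecast, under stated assumptions. `past_projects` is a hypothetical record of planned versus actual durations for similar past plans. The median overrun ratio serves as the base-rate correction. `adjustment` is a hypothetical case-specific multiplier that stands in for the second question above.

```python
from statistics import median

# Hypothetical reference class: (planned_days, actual_days) for similar past plans.
past_projects = [(10, 14), (5, 6), (20, 31), (8, 8), (12, 18), (15, 21)]

def overrun_ratios(history):
    """How much longer each past plan actually took, as actual / planned."""
    return [actual / planned for planned, actual in history]

def reference_class_forecast(inside_view_days, history, adjustment=1.0):
    """Scale an inside-view estimate by the typical historical overrun.

    adjustment > 1.0 means you expect more optimism bias than usual for this case,
    < 1.0 means less. Choosing it is a judgment call, not something computed here.
    """
    baseline_ratio = median(overrun_ratios(history))
    return inside_view_days * baseline_ratio * adjustment

# Your gut says the next plan will take 10 days.
forecast = reference_class_forecast(10, past_projects)
print(f"Baseline forecast: {forecast:.1f} days")

# The asymmetry: few past plans finished on time or early.
on_time = sum(ratio <= 1.0 for ratio in overrun_ratios(past_projects)) / len(past_projects)
print(f"Past plans on time or early: {on_time:.0%}")
```

Using the median rather than the mean keeps one disastrous past project from dominating the baseline. If you want a more conservative plan, a higher percentile of the overrun ratios would also work.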

Social proof

You imitate the behavior of a crowd

John walked by a man crying for help and noticed that nobody around the man seemed to care. So John kept walking.

The Bystander Effect is a corollary of social proof.

Ask Yourself: Am I just relying on others’ decisions because I’m in an unfamiliar environment or crowd, lack knowledge, am stressed, or have low self-esteem? Do I want to agree with the group because it’s more comfortable? Am I being pluralistically ignorant, assuming nothing is wrong because nobody else seems to think anything is wrong? Am I diffusing responsibility by siding with the majority, since the more people there are, the less personal responsibility I feel?

Reason-respecting bias

You comply with requests merely because you’ve been given a reason

Ask Yourself: Is the reason I’m being given actually a good reason?

Contrast-comparison bias

You judge something by comparing it with something else, instead of judging it on its own

Jessica saw a shirt she kinda liked. The tag said it was 70% off, marked down from $200 to a final price of $60. The discount was so large that the shirt seemed like a great bargain, so she bought it.

Ask Yourself: Am I evaluating this person or thing on its own and not by how it contrasts with something else?

You can of course use this bias (which marketers do all the time). You can:

- Show a thing you want to sell next to an inferior version

- Sell the expensive thing first, then sell the cheaper stuff afterward since it’ll seem like a bargain

Self-deception and denial bias

You distort reality to reduce pain or increase pleasure

Joe saw that he visibly irritated his friends with the way he phrased some of his comments. He thought to himself, “they’re just stuck-up, they know it’s true. I don’t know why they have a problem with me, I’m just fine”.

Ask Yourself: Am I telling myself a narrative that saves me pain or gives me pleasure? Is this narrative actually wishful thinking? Am I in denial?

You can, of course, use this bias in good ways. Stoics distort reality by reframing what happened to them in more positive terms. The difference is that they know it’s a reframing or distortion; you get in trouble when you confuse the distortion with reality.

Deprivation syndrome

You react strongly when something you like or have is taken away or is at risk of being taken away. You also value what you can’t have more highly (this includes scarcity)

Ask Yourself: Do I want this for emotional or rational reasons? Why do I want this? Am I placing a higher value because I almost have it and am afraid to lose it? Do I only want it just because I can’t have it? Do I only want it just because it’s rare or hard to obtain? Do I want it because other people want it?

Endowment effect

You value the things you own more than you would value the same things if you didn’t own them

Ask Yourself: Am I holding onto this thing when it’d be cheaper to just let it go and buy it again in the future if required? Am I placing more value on this thing than if I didn’t have it?

Consistency bias

You are consistent with your prior commitments and ideas even when acting against your best interest or in the face of disconfirming evidence

Sarah agreed to take her and Susie’s kids to school. After a couple of days, Susie asked if Sarah could do it again. Since Sarah had done it before, she agreed more readily to do it again.

Ask Yourself: Do I already have a public, effortful, or voluntary commitment? Is this commitment in line with what I want to be doing? Do I really just need to cut my losses? Am I falling for the low-ball or the foot-in-the-door technique?

Of course, you can use this bias in more sinister ways by getting someone to commit to something, even if small, so that they’re more likely to agree to do it again in the future and even like you more as a result.

Ben Franklin did something similar with a man who didn’t particularly like him. He asked to borrow a book from the man, and the man agreed. Afterward, the man was noticeably friendlier toward Ben, which helped smooth their relationship.

Self-serving bias

You have an overly positive view of your abilities and future. This is over-optimism.

Ask Yourself: Am I overestimating my ability to predict or plan for the future? How can I be wrong? Who could be qualified to tell me I’m wrong? Have I looked at the track record instead of trusting my first impressions? Am I only looking at the successes in the track record - confirmation bias?

Confirmation bias

You only consider evidence that confirms your beliefs

Ask Yourself: Do I already have a belief about the subject? Am I looking for reasons that support my belief?

Familiarity bias

You prefer things that are more familiar

Ask Yourself: Do I want to do this thing only because I’m more familiar with it and that makes me comfortable?

Loss aversion

You find losses more painful than gains are pleasant

Ask Yourself: Am I worried about failing even though the upside of the risk is greater and more likely?
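Loss aversion is often modeled with a prospect-theory value function, where losses are scaled up by a loss-aversion coefficient. This sketch assumes the commonly cited estimates from Tversky and Kahneman’s later work (curvature 0.88, loss aversion 2.25). Treat them as illustrative, not as your personal values. It also ignores prospect theory’s probability weighting for simplicity. It evaluates a coin flip that wins $110 or loses $100.

```python
CURVATURE = 0.88      # assumed: diminishing sensitivity to larger amounts
LOSS_AVERSION = 2.25  # assumed: losses weigh about 2.25x as much as equal gains

def value(x):
    """Prospect-theory-style value of gaining (x > 0) or losing (x < 0) x dollars."""
    if x >= 0:
        return x ** CURVATURE
    return -LOSS_AVERSION * (-x) ** CURVATURE

def expected_value(gamble):
    """Plain expected dollar value of a list of (probability, outcome) pairs."""
    return sum(p * x for p, x in gamble)

def felt_value(gamble):
    """Expected subjective value, ignoring probability weighting."""
    return sum(p * value(x) for p, x in gamble)

coin_flip = [(0.5, 110), (0.5, -100)]  # win $110 or lose $100
print(f"expected value: {expected_value(coin_flip):+.2f}")
print(f"felt value:     {felt_value(coin_flip):+.2f}")
```

The bet is worth +$5 on average, but its felt value comes out negative, because the $100 loss is weighted more than twice as heavily as the $110 gain.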

Status-quo bias

You prefer to keep things the way they are: loss aversion + familiarity bias

Anchoring effect

You give too much weight to initial information and this influences your decisions

Ask Yourself: Did I already have a value in mind? Am I being careful that this value doesn’t affect my estimation of the quantity? Am I making choices from a zero base level and remembering what I want to achieve? Am I adjusting the information I have to reality? Is the question/situation being framed for me and influencing the information I pay attention to or conclusions I make - bias from omission and abstract blindness?

Availability bias

When you want to estimate the frequency or size of an event, you judge it instead by how easily instances come to mind

Ask Yourself: Was it easy to recall how often this thing happens or how big this thing is? Is this thing representative evidence? Is this thing a random event?

Affect bias

You make decisions or judgements by consulting your emotions

Ask Yourself: Am I making a decision while being angry, upset, sad, depressed, or excited?

Solution aversion

You deny problems and any scientific evidence supporting the existence of the problems when you don’t like the solution

Hindsight bias

You change the history of your beliefs after observing the outcome

Even though he was a little shaky about his predictions during the game, after the game he shouted, “I told you I knew they’d win!”

Ask Yourself: Did I think the outcome was obvious? If so, can I show it was obvious by referencing a Decision Journal entry that contained my prediction of the outcome? Am I giving too little credit to people who made good decisions just because those decisions seem obvious after the fact (outcome bias)?

Outcome bias

You blame decision makers for good decisions when the outcome is bad and give them too little credit for successful decisions that appear obvious only after the fact (hindsight bias)

Ask Yourself: Did I blame them for a bad outcome even though the decision was good?

Simpson’s paradox

You see a trend in separate groups of data, but the trend disappears or reverses when the groups are combined

A great example of this was the UC Berkeley graduate admissions case. The aggregate admissions data suggested a bias in favor of men. But when you look at admission rates department by department, the apparent bias disappears, and in several departments it actually favors women.

In short, men tended to apply to departments with high acceptance rates, while women tended to apply to departments with low acceptance rates. This dragged down the overall acceptance rate for women even though they might have been favored in multiple departments.
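The mechanism is easy to reproduce with hypothetical numbers. These figures are made up for illustration and are not the actual Berkeley data. Women have the higher admission rate in each department. But most of them apply to the selective department, so they end up with the lower rate once the departments are pooled.

```python
# Hypothetical admissions data, made up for illustration (NOT the real Berkeley numbers).
# department -> group -> (applicants, admitted)
admissions = {
    "Dept X (lenient)": {"men": (800, 480), "women": (100, 70)},
    "Dept Y (selective)": {"men": (100, 10), "women": (800, 120)},
}

def rate(applicants, admitted):
    return admitted / applicants

# Within each department, women are admitted at the higher rate.
for dept, groups in admissions.items():
    for group, (applicants, admitted) in groups.items():
        print(f"{dept}, {group}: {rate(applicants, admitted):.0%}")

def pooled_rate(group):
    """Admission rate for a group after combining all departments."""
    applicants = sum(groups[group][0] for groups in admissions.values())
    admitted = sum(groups[group][1] for groups in admissions.values())
    return rate(applicants, admitted)

# Pooled, the direction reverses: men are admitted at the higher rate.
for group in ("men", "women"):
    print(f"All departments, {group}: {pooled_rate(group):.0%}")
```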

Base rate fallacy

You make decisions with specific information and forget to include the base rate (i.e. general) information

In 1995, the UK Committee on Safety of Medicines issued a letter to doctors warning that third-generation oral contraceptives doubled the chance of blood clots. Consequently, many women stopped taking birth control, and unwanted pregnancies increased significantly.

But the media forgot to mention the base rate: the likelihood of getting a blood clot in the first place was 1 in 7,000. Third-generation contraceptives doubled this to 2 in 7,000, which is still a very small number.
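The same change reads very differently as a relative risk and as an absolute risk. Here is a quick check with Python’s `fractions` module, using the figures from the text.

```python
from fractions import Fraction

# Figures from the text: clot risk without and with third-generation pills.
baseline_risk = Fraction(1, 7000)
pill_risk = Fraction(2, 7000)

relative_risk = pill_risk / baseline_risk      # how the warning sounded: "doubled"
absolute_increase = pill_risk - baseline_risk  # the change in each woman's risk

print(f"Relative risk: {relative_risk}x")
print(f"Absolute increase: {float(absolute_increase):.4%}")
print(f"Extra clots per 7,000 women: {absolute_increase * 7000}")
```

"Twice the risk" and "about one extra case per 7,000 women" describe the same numbers. Only the second includes the base rate.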

Conditional fallacy

You confuse P(A|B) with P(B|A), even though in general P(A|B) ≠ P(B|A)

The probability that an animal is a dog given it has fur is not equal to the probability that an animal has fur given it’s a dog.

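The prosecutor’s fallacy is the courtroom version of this mistake. It treats the probability of the evidence given that the defendant is innocent as if it were the probability that the defendant is innocent given the evidence, and probability has been misapplied this way in court many times.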
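Counting a hypothetical set of animals shows how far apart the two conditional probabilities can be. The counts in `animals` are made up. `prob` estimates P(event | given) as a ratio of counts. The last line checks the result against Bayes’ theorem, which is what actually connects the two directions.

```python
# Hypothetical animal counts, keyed by (species, has_fur).
animals = {
    ("dog", True): 30,
    ("cat", True): 50,
    ("hamster", True): 15,
    ("lizard", False): 5,
}

def is_dog(animal):
    return animal[0] == "dog"

def has_fur(animal):
    return animal[1]

def prob(event, given):
    """Estimate P(event | given) as count(event and given) / count(given)."""
    given_count = sum(n for a, n in animals.items() if given(a))
    both_count = sum(n for a, n in animals.items() if given(a) and event(a))
    return both_count / given_count

def marginal(event):
    """Estimate P(event) over all animals."""
    return sum(n for a, n in animals.items() if event(a)) / sum(animals.values())

p_dog_given_fur = prob(is_dog, given=has_fur)
p_fur_given_dog = prob(has_fur, given=is_dog)
print(f"P(dog | fur) = {p_dog_given_fur:.2f}")
print(f"P(fur | dog) = {p_fur_given_dog:.2f}")

# Bayes' theorem links the two: P(dog | fur) = P(fur | dog) * P(dog) / P(fur).
bayes = p_fur_given_dog * marginal(is_dog) / marginal(has_fur)
print(f"Via Bayes' theorem: {bayes:.2f}")
```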

Conjunction fallacy

You judge two events happening together as more likely than one of them alone, even though P(A and B) ≤ P(A) and P(A and B) ≤ P(B)

A classic example from the aforementioned Amos Tversky and Daniel Kahneman:

Linda is 31 years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice, and also participated in anti-nuclear demonstrations.

Which is more probable?

- Linda is a bank teller.

- Linda is a bank teller and is active in the feminist movement.

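Most people choose the second option, but probability theory guarantees that it can never be more probable than the first: every bank teller who is active in the feminist movement is still a bank teller.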
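The subset argument behind the inequality can be checked numerically. This sketch builds hypothetical random populations. The trait rates are arbitrary, and traits are drawn independently for simplicity, though the inequality holds however the traits are correlated. It then counts how often "teller and feminist" beats "teller".

```python
import random

def random_population(rng, size=500):
    """Hypothetical people whose traits are drawn at random, with arbitrary rates."""
    teller_rate, feminist_rate = rng.random(), rng.random()
    return [
        {"teller": rng.random() < teller_rate, "feminist": rng.random() < feminist_rate}
        for _ in range(size)
    ]

def probabilities(population):
    """Return (P(teller), P(teller and feminist)) for a population."""
    n = len(population)
    p_teller = sum(person["teller"] for person in population) / n
    p_both = sum(person["teller"] and person["feminist"] for person in population) / n
    return p_teller, p_both

rng = random.Random(0)
results = [probabilities(random_population(rng)) for _ in range(200)]

# Everyone counted in "teller and feminist" is also counted in "teller",
# so the conjunction can never come out ahead, whatever the trait rates are.
violations = sum(p_both > p_teller for p_teller, p_both in results)
print(f"Populations where P(teller and feminist) > P(teller): {violations}")
```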

Recommended resources

- Seeking Wisdom by Peter Bevelin

- Poor Charlie’s Almanack, edited by Peter D. Kaufman

- Thinking, Fast and Slow by Daniel Kahneman

- How Not To Be Wrong by Jordan Ellenberg

[1] Feature image provided by xkcd